Is it Time to Refresh My Rubric? A Checklist When Your Rubric Needs a Tune-Up

Do you have a rubric that you’ve been seeing a lot of lately? Perhaps there’s one rubric you’ve been using to grade multiple submissions this semester, and you’ve noticed that it isn’t working quite as smoothly as you’d like.

Grading with a rubric often reveals its limitations: you might notice, for example, that students are struggling in a particular area that isn’t captured on the rubric, so you aren’t able to provide feedback on it. Or perhaps students seem confused by language in the rubric, and that confusion is reflected in their submissions.

In this post, we’ll explore how to determine if your rubric needs a refresh and provide a checklist to help make necessary updates.

Two notes before we get started:

- To keep expectations clear and consistent, rubrics should be updated before they are released to students. It’s okay to work ahead in your course shell and modify rubrics for assessments in coming weeks so long as those rubrics have not yet been published.

- For consistency and fairness, any changes to a rubric in a multi-section course should be made in consultation with the teaching team.

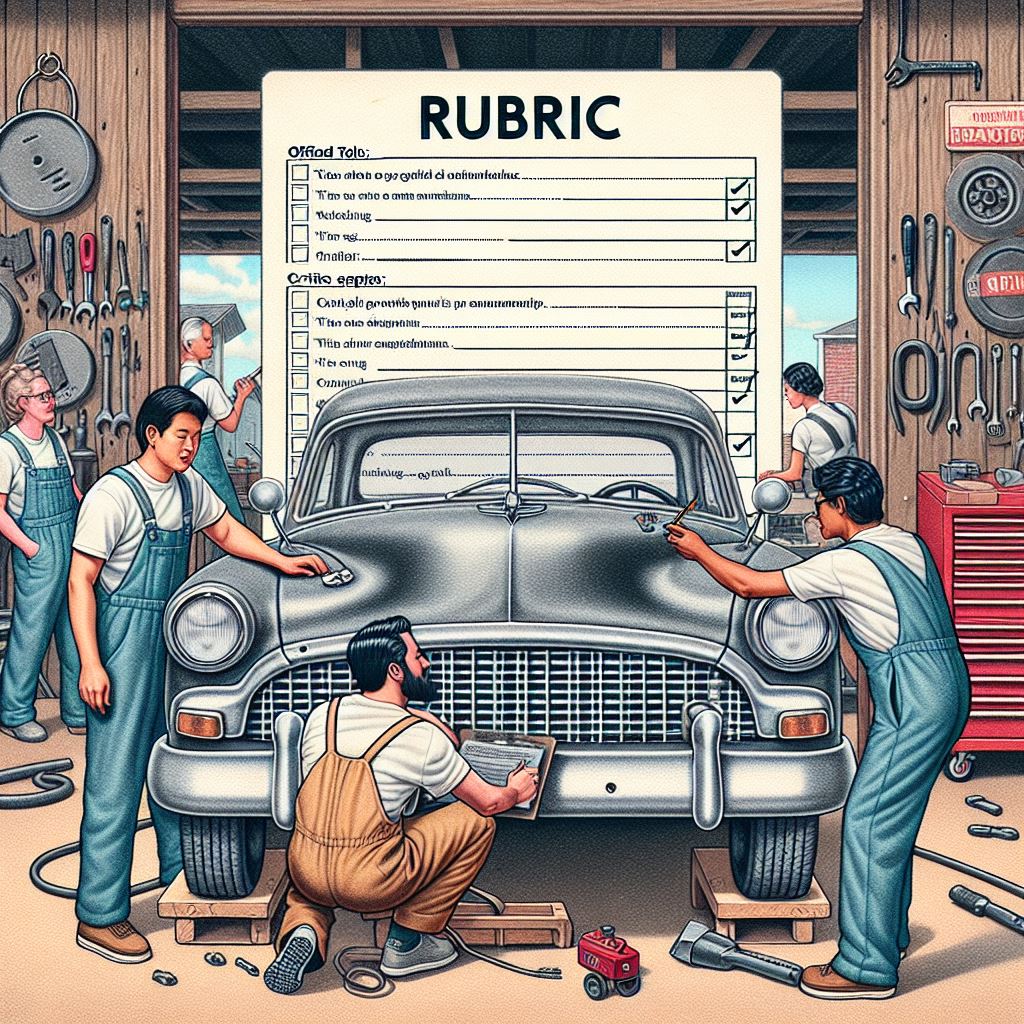

The checklist

- Alignment with assignment instructions and course learning outcomes

- Compare criteria: Does the rubric assess what students are asked to do in the assignment? Or does the rubric include elements (e.g., spelling, grammar, professionalism) that are not specified in the assignment instructions? If so, tighten up criteria to reflect what’s being assessed.

- Match verbs: Do the verbs used in rubric criteria align with the verbs used in learning outcomes? For example, if a related course learning outcome specifies students will “describe,” does “describe” also appear somewhere in the criteria? Are the assessed criteria at the same Bloom’s level as the course learning outcomes?

- Clear pass criteria

- Confirm that the rubric clearly states what a pass looks like for each criterion: Is it obvious in the level descriptions what students should demonstrate to pass? That is, does the rubric describe the minimum level of achievement to pass in each criterion? Clarity in descriptions promotes fairness and rigour in grading and mitigates grade inflation.

- Is the pass score clearly indicated by the point values attached to each level? In other words, what is the minimum score that a student needs to achieve in each criterion to pass, given the passing grade in your course (e.g., 55% or 60%)?

- Appropriate point allocation

- Check that points are appropriately assigned to low-value and high-value criteria: Do you have criteria scored out of low values, such as 2 or 5? That’s great for criteria that need to be assessed but aren’t worth that much. Watch out, though, for unnecessary granularity of points in low-value criteria (e.g., 1.3, 1.4, 1.5 out of 2). Instead, experiment with using fewer levels, and therefore fewer point values, to score low-value criteria, such as a two-point or three-point scale.

- Suitable number of levels

- Verify that the number of levels is appropriate to the complexity of the assessment task: A multifaceted, complex task will require more levels to capture the range of possible achievement. As an example, the 5+2 approach to rubric design includes a “0” level for non-submissions or incomplete tasks and a “Perfect” level to give you more range at the higher ends of the rubric.

- Feedback on clarity

- Get feedback on the clarity of descriptions: Too much detail in descriptions can be overwhelming, but too little detail can lead to vague expectations and open space for bias in grading.

- Get students to weigh in: Ask students to work with the rubric in a practice session (see checklist item #6, “Socialize the rubric with students”) or a peer exercise to interpret the rubric and articulate what they understand about expectations. Use this time to clarify assignment instructions with students, and note any adjustments that would improve the rubric for future use.

- Another tool to check clarity: Organize a group marking session with colleagues to establish benchmarks for how the rubric is being used. Compare a sample of graded assignments across your sections to understand your differing interpretations of the rubric. Differing interpretations between faculty can reveal inconsistencies and limitations in rubric design.

- Socialize the rubric with students

- Develop a plan for how you will socialize the rubric with your students: Is the rubric presented with the assignment instructions? Do students know where to locate the rubric on eConestoga? Do students understand how to interpret the feedback the rubric provides? Do students have opportunities to practice using the rubric, perhaps in a self-assessment activity?
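For the pass-score check under “Clear pass criteria,” a quick calculation can help confirm what the minimum passing score works out to in each criterion. The sketch below is only an illustration: the criterion names, point values, and the 60% pass mark are made-up assumptions, and it assumes criteria are scored in whole points, so the threshold is rounded up to the next whole point.

```python
import math


def minimum_passing_points(criteria, pass_percent):
    """Return the lowest whole-point score in each criterion that meets the pass mark.

    criteria: dict mapping a criterion name to its maximum points (whole numbers).
    pass_percent: the course passing grade as a percentage, e.g. 55 or 60.
    """
    return {
        name: math.ceil(max_points * pass_percent / 100)
        for name, max_points in criteria.items()
    }


# Hypothetical rubric: names and point values are assumptions for illustration.
rubric = {"Analysis": 20, "Organization": 10, "References": 5}

pass_marks = minimum_passing_points(rubric, 60)
for name, points in pass_marks.items():
    print(f"{name}: at least {points} out of {rubric[name]} to pass")
```

If the level that describes a minimal pass carries fewer points than the number calculated here, the level descriptions and point values may be out of step with the course passing grade.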

Copilot provided input on the organization of this post.